It just refuses to give you the performance you expect to…

Here is a sample of some interesting charts:

Analyzing the %time in GC metric revealed that some processes spent as much as 50% of their time doing GC.

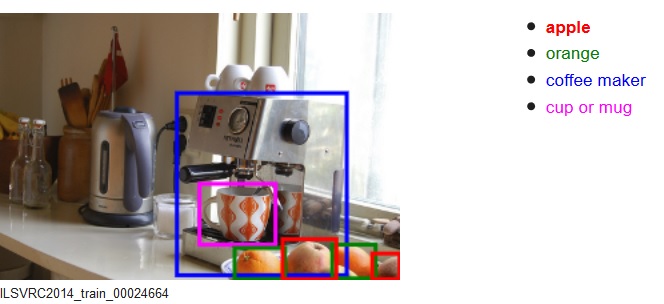

Basic systems can be built from off-the-shelf components, which makes it relatively easy to solve problems of detection (“what is the object appearing in the image?”), localization (“where in the image is a specific object?”), or a combination of both.

Above: Two images from the ILSVRC14 Challenge

Most systems capable of product-level accuracy are limited to a fixed set of predetermined concepts, and are also limited by the inherent assumption that a representative database of all possible appearances of the required concepts can be collected.

These two limitations should be considered when designing such a system, as concepts and physical objects used in everyday life may not fit easily within them.

Even though CNN-based systems that perform well are quite new, the fundamental questions outlined below relate to many other Computer Vision systems.

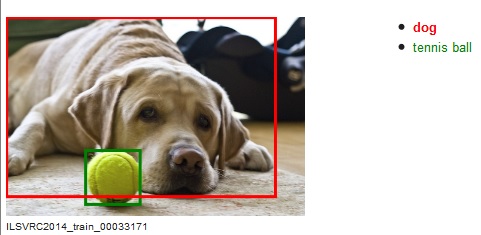

One consideration is that some objects may have different functionality (and hence different names) while having the same appearance.

For example, the distinction between a teaspoon, a tablespoon, a serving spoon, and a statue of a spoon is related to their size and usage context. We should note that in such cases the existence and definition of the correct system output depend heavily on the system’s requirements.

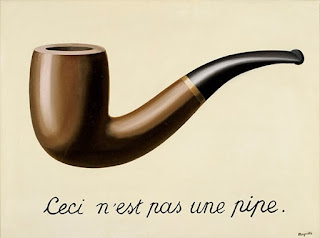

In general, works of plastic art raise the philosophical question of what the shown object is (and hence what the required system output is). For example, is there a pipe shown in the image below?

When defining a system to recognize an object, another issue is the definition of the required object. Even for a simple everyday object, different definitions will result in different sets of concepts. For example, considering a tomato, one may ask which appearances of a tomato should be identified as a tomato.

Clearly, this is a tomato:

But what about the following? When does the tomato cease to be a tomato and become a sauce? Does it always turn into a sauce?

Since this kind of Machine Learning system learns from examples, different systems will behave differently. One may use all examples of all states of a tomato as one concept, whereas another may split it into different concepts (that is, whole tomato, half a tomato, rotten tomato, etc.). In both cases, a tomato that has a different appearance and is not included in any of the concepts (say, shredded tomato) will not be recognized.

Other everyday concepts have a functional meaning (i.e. they are defined by the question “what is it used for?”) whereas the visual cues may be limited. For example, all of the objects below are belts. Except for the typical context (a possible location around the human body, below the hips) and/or function (can hold a garment), there is no typical visual shape. We may define different types of belts that interest us, but then we may need to handle objects that are similar to two types yet distinctly belong to one.

Other concept definition considerations that should be addressed may be:

Written by Yohai Devir.

At PicScout, we use the .NET Compiler Platform, better known by its codename “Roslyn”, an open source compiler and code analysis API for C# (https://roslyn.codeplex.com/).

The compilers are available via the traditional command-line programs and also as APIs, which are available natively from within .NET code.

Roslyn exposes modules for syntactic (lexical) analysis of code, semantic analysis, dynamic compilation to CIL, and code emission.

Recently, I used Roslyn for updating hundreds of files to support an addition to our logging framework.

I needed to add a new member to each class that was using the logger and modify all the calls to the logger, of which there were several hundred.

We came up with two ways of handling this challenge.

After reading a class into an object representing it, one possible way of adding a member is to supply a syntactical definition of the new member, and then regenerate the source code for the class.

The problem with this approach was the relative difficulty of configuring a new member correctly.

Here is how it might look:

Generating the code:

private readonly OtherClass _b = new OtherClass(1, "abc");

Another option, which is more direct, was to simply get the properties of the class and use them.

For example, we know where the class definition ends and we can append a new line containing the member definition.

Here is how it looks:

Get class details:

Insert the new line (new member):

After that, replacing the calls to the new logger is a simple matter of search and replace.
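The direct, text-level approach can be illustrated with a rough sketch. Roslyn itself is a .NET API, so this Python version is only an illustration of the idea and not the code we ran: a naive brace counter stands in for the class span that Roslyn provides, the member is placed just before the class's closing brace (an assumption about where the new line goes), and `Logger.Write(` is a hypothetical name for the old logger call.

```python
import re


def insert_member(source: str, class_name: str, member: str) -> str:
    """Insert `member` as the last line inside the body of `class_name`.

    Naive stand-in for Roslyn's class span: braces are counted as plain
    characters, so braces inside strings or comments are not handled, and
    the closing brace of the class is assumed to sit on its own line.
    """
    lines = source.splitlines()
    pattern = re.compile(rf"\bclass\s+{re.escape(class_name)}\b")
    # Line on which the class declaration starts.
    start = next(i for i, line in enumerate(lines) if pattern.search(line))

    depth = 0
    opened = False
    for i in range(start, len(lines)):
        for ch in lines[i]:
            if ch == "{":
                depth += 1
                opened = True
            elif ch == "}":
                depth -= 1
        if opened and depth == 0:
            # Line i closes the class; indent the member one level deeper.
            closing = lines[i]
            indent = closing[: len(closing) - len(closing.lstrip())] + "    "
            lines.insert(i, indent + member)
            break
    else:
        raise ValueError(f"class {class_name!r} is never closed")

    trailing = "\n" if source.endswith("\n") else ""
    return "\n".join(lines) + trailing


def replace_logger_calls(source: str, old_call: str, new_call: str) -> str:
    """Plain search and replace of the logger call prefix."""
    return source.replace(old_call, new_call)


if __name__ == "__main__":
    code = 'class Worker\n{\n    void Run() { Logger.Write("x"); }\n}\n'
    code = insert_member(
        code, "Worker", 'private readonly OtherClass _b = new OtherClass(1, "abc");'
    )
    print(replace_logger_calls(code, "Logger.Write(", "_b.Write("))
```

With a real syntax tree the position of the closing brace comes from the parser, which is what makes this approach safe to apply across hundreds of files.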

Recently we started to expose some of the API’s abilities in public websites.

For example, see the PicScout Search Tool on www.picscout.com (and press the “Launch Tool” button).

Here’s the issue: Exposing the key to unknown users can make us vulnerable to spam and abuse.

To overcome this problem, we decided to use Google reCAPTCHA.
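As a rough sketch of the server side of such a setup: before serving a request with our own API key, the backend can check the reCAPTCHA token that the browser sent. The function names and the injectable `post` parameter below are assumptions made for illustration, not our production code; the verification URL and the `secret`/`response` fields are those of Google's `siteverify` endpoint.

```python
import json
import urllib.parse
import urllib.request

VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def _http_post(url, fields):
    """POST form fields and return the decoded JSON reply."""
    data = urllib.parse.urlencode(fields).encode("ascii")
    with urllib.request.urlopen(url, data=data, timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))


def is_human(token, secret, post=_http_post):
    """Return True only if the verification service accepts the token.

    `post` is injectable so the check can be exercised without network access.
    """
    if not token:
        # No token was submitted, so there is nothing to verify.
        return False
    result = post(VERIFY_URL, {"secret": secret, "response": token})
    return bool(result.get("success"))
```

Only when `is_human` returns `True` would the backend go on to call the API with the protected key, so the key itself never reaches the browser.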

Enjoy 🙂

But, how can we manage dependencies between Jenkins jobs that are based on .Net code?

Of course, we can manage job dependencies manually, marking in each job which jobs depend on it, but this takes a lot of time to maintain and is also error-prone.

(we are still working on this one)

Hi all,

PicScout is looking for top-notch SW engineers who would like to join an extremely innovative team.

If you think you would fit the team, please solve the quiz below, and if your solution is well written, expect a phone call from us. You can write the code in any programming language you like.

Don’t forget to also attach your CV along with the solution.

Candidates who are eventually hired will win a 3K NIS prize.

So here it is:

We want to build a tic-tac-toe server.

The server will enable two users to log in. It will create a match between them and will let them play until the match is over.

The clients can run on any UI framework or even as console applications (we want to put our main focus on the server for this quiz). Extra credit is given for: good design, test coverage, clean code.

Answers can be sent to: omer.schliefer@picscout.com

At PicScout, we use automated testing.

Running tests is an integral part of our Continuous Integration and Continuous Deployment workflow.

It allows us to implement new features quickly, since we are always confident that the product still works as we expect.

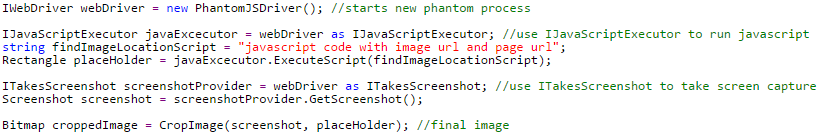

The automation tool that we use is Selenium, an open source set of tools for automating browser-based applications.

Despite the many benefits of Selenium, there is one drawback that constitutes a bottleneck: the running time of the tests.

For example, in one of our projects, we need to run 120 UI tests, which takes 75 minutes – a long time to wait for a deployment.

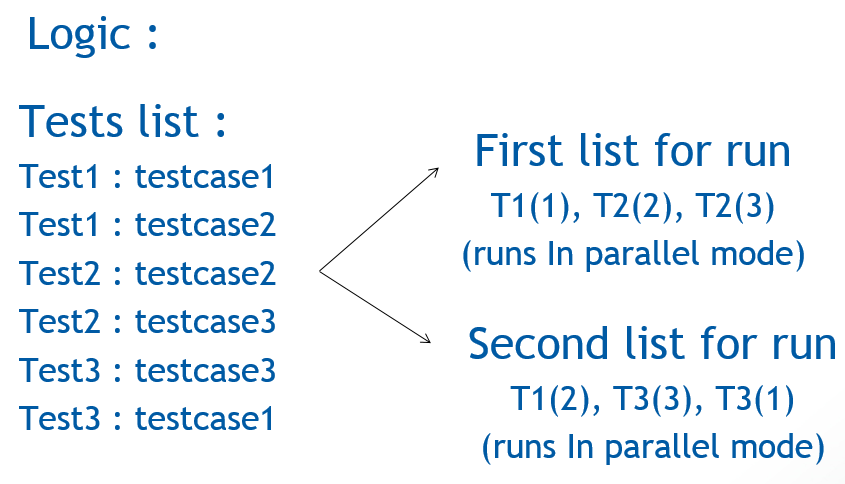

To handle this situation, we developed a tool that runs the tests in parallel mode.

Using this tool, we were able to reduce the test run time to 12 minutes.

How it works:

Two tests can be run in parallel mode as long as they are not affected by each other.

We avoid writing tests that use and modify the same data, since they cannot be run in parallel.

The assumption is that two tests can be run in parallel mode if each test has a different ID.
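The scheduling rule can be sketched roughly as follows. This is not our actual tool: it assumes that a test's ID identifies the data the test works on, so tests with different IDs are sent to separate workers while tests sharing an ID run one after another. The names `run_tests` and `_run_group`, and the `(data_id, name, func)` tuple shape, are hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def _run_group(group):
    """Run the tests of one ID sequentially; map each test name to pass/fail."""
    results = {}
    for name, func in group:
        try:
            func()
            results[name] = True
        except Exception:
            # Any exception, including a failed assertion, marks the test as failed.
            results[name] = False
    return results


def run_tests(tests, max_workers=4):
    """tests: iterable of (data_id, name, func) tuples."""
    groups = defaultdict(list)
    for data_id, name, func in tests:
        groups[data_id].append((name, func))

    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Each group goes to one worker, so groups run in parallel with each other.
        for partial in pool.map(_run_group, groups.values()):
            results.update(partial)
    return results
```

Threads are enough for this sketch because browser-driven tests spend most of their time waiting on the browser; the number of workers then bounds how many browser sessions are open at once.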

That’s how we run UI tests these days.